When people talk about performance and scalability, they very often use the two words synonymously. However, they mean different things. As there is a lot of misunderstanding on this topic, I thought it made sense to write a blog post about it.

One of the best explanations can be found here. It was written by Werner Vogels, the CTO of Amazon. I think everybody agrees that he knows what he is talking about.

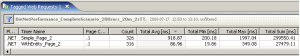

Performance refers to the capability of a system to provide a certain response time, serve a defined number of users, or process a certain amount of data. So performance is a software quality metric. Contrary to what many people think, it is not vague, but can be defined in numbers.
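To illustrate what "defined in numbers" can look like, here is a minimal Python sketch that checks a response time goal against a percentile of measured values. The function names, the nearest-rank percentile method, and the sample measurements are my own assumptions for illustration; they do not come from any particular tool.

```python
import math

def percentile(samples, pct):
    """Return the nearest-rank percentile (pct in 1..100) of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest-rank method: smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def meets_goal(response_times_ms, pct, limit_ms):
    """True if the pct-th percentile response time is within limit_ms."""
    return percentile(response_times_ms, pct) <= limit_ms

# Made-up response times in milliseconds, for illustration only.
samples = [120, 95, 180, 210, 130, 160, 110, 450, 140, 125]

# Hypothetical goal: 90% of requests are answered within 250 ms.
print(meets_goal(samples, 90, 250))
```

A goal phrased this way ("90% of requests within 250 ms") is either met or not met, which is what makes performance a measurable quality metric.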

If our performance requirements change (e.g. we have to serve more users or provide lower response times), or if we cannot meet our performance goals, scalability comes into play.

Scalability refers to the characteristic of a system to increase performance by adding additional resources. Very often people think that their systems are scalable out of the box. “If we need to serve more users, we just add additional servers” is a typical answer to performance problems.

However, this assumes that the system is scalable, meaning that adding additional resources really helps to improve performance. Whether your system is scalable or not depends on your architecture. Software systems that do not have scalability as a design goal often do not scale well. This InfoQ interview with Cameron Purdy, VP of Development in Oracle’s Fusion Middleware group and former Tangosol CEO, provides a good example of the limited scalability of a system. There are also two nice articles by Sun’s Wang Yu on Vertical and Horizontal Scalability.
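Whether adding resources "really helps" can itself be expressed in numbers. The following Python sketch compares measured throughput at different server counts with ideal linear scaling. The function name and the throughput figures are hypothetical and only serve as an illustration.

```python
def scaling_efficiency(throughput_by_servers):
    """Map server count -> (speedup, efficiency) relative to the smallest setup.

    speedup    = throughput / throughput of the smallest setup
    efficiency = speedup / ideal linear speedup (1.0 means perfect scaling)
    """
    base_n = min(throughput_by_servers)
    base_tp = throughput_by_servers[base_n]
    result = {}
    for n, tp in sorted(throughput_by_servers.items()):
        speedup = tp / base_tp
        ideal = n / base_n
        result[n] = (speedup, speedup / ideal)
    return result

# Made-up throughput in requests per second, keyed by number of servers.
measured = {1: 100.0, 2: 190.0, 4: 320.0, 8: 400.0}

for servers, (speedup, efficiency) in scaling_efficiency(measured).items():
    print(f"{servers} servers: speedup {speedup:.2f}, efficiency {efficiency:.0%}")
```

In this invented data set, going from four to eight servers doubles the hardware but adds only a quarter more throughput. A curve that flattens like this is a typical sign that the architecture, not the hardware, is the limiting factor.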

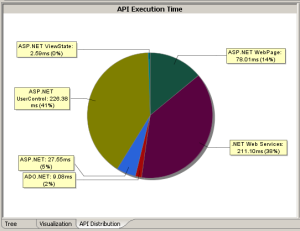

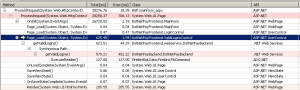

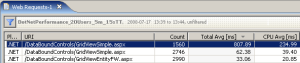

So how does this relate to dynaTrace? With Lifecycle APM we defined an approach to ensuring performance and scalability over the whole application lifecycle, from development to production. We work with our customers to make performance management part of their software processes, going beyond performance testing and firefighting when there are problems in production.

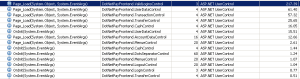

As scalability problems are in nearly all cases architectural problems, these characteristics have to be tested as early as the development phase. dynaTrace provides means to integrate and automate performance management in your Continuous Integration environments.
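To give an idea of what an automated performance check in a Continuous Integration build could look like, here is a generic Python sketch. It is not dynaTrace's API. The function name, the 10% tolerance, and the measurements are assumptions made for this example.

```python
def find_regressions(baseline_ms, current_ms, tolerance=0.10):
    """Return {name: (baseline, current)} for measurements that got slower.

    A measurement counts as a regression if the current value exceeds
    the baseline by more than the given relative tolerance.
    """
    regressions = {}
    for name, base in baseline_ms.items():
        current = current_ms.get(name)
        if current is None:
            continue  # not measured in this build, nothing to compare
        if current > base * (1 + tolerance):
            regressions[name] = (base, current)
    return regressions

# Made-up response times in milliseconds from a previous and the current build.
baseline = {"login": 200.0, "search": 350.0}
current = {"login": 205.0, "search": 420.0}

for name, (base, now) in find_regressions(baseline, current).items():
    print(f"{name}: {base:.0f} ms -> {now:.0f} ms")
```

A build script could fail the build whenever the returned dictionary is not empty, so that a slowdown is noticed in the same build that introduced it.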

When I talk to people I sometimes get the feedback “… isn’t that premature optimization” (have a look at the cool image on premature optimization in K. Scott Allen’s Blog). This is a serious misconception. Premature optimization would mean that we always try to optimize performance whenever and wherever we can. Lifecycle APM, with Continuous Performance Management as its development part, aims to collect the information needed to always know the scalability and performance characteristics of your application. This serves as a basis for deciding when and where to optimize, and so actually helps to avoid premature optimization in the wrong direction.

In conclusion, if we want our systems to be scalable, we have to take this into consideration right from the beginning of development and also monitor it throughout the lifecycle. If we have to ensure it, we have to monitor it. This means that performance management must be treated as equally important as the management of functional requirements.