ASP.NET offers a powerful GridView control that can be used to display data from different data sources, e.g.: SQLServer, LINQ, XML, … The control additionally supports features like paging, sorting and editing.

Visual Studio makes it very easy to use this control on your web page and to bind it to a data source like a SQL Server table. It only takes several drag&drop operations on your page and a few more clicks to configure which data you want to display and which features of the GridView you want to enable.

This easy-to-use approach is nice and it works well for many use case scenarios. From our experience we however know that easy-to-use and flexible components are not always concluding in high performance.

The Sample Implementation: 3 different ways to use a GridView control

I’ve created a sample ASP.NET Web Site with 3 different pages. Each page hosts a GridView control to display the content of the Northwind Products Table. I added 10000 additional product records in order to show a more real-life scenario than to query a table with only a few records.

The difference between the 3 pages is the underlying data source:

- The first page used a standard SQL Data Source

- The second page used the same SQL Data Source but enabled Data Caching for 5 seconds

- The third page used the new Entity Data Source.

All of the Grid Views support Paging, Editing, Selection and Deletion.

The Performance Test

I ran a simple load test on each of the individual pages. The workload was a 20 user load test over 5 minutes where each user executed the following actions:

- queried the initial page

- sorted the grid by product column

- clicked through 3 different grid pages

- sorted the product column again

The Performance Results

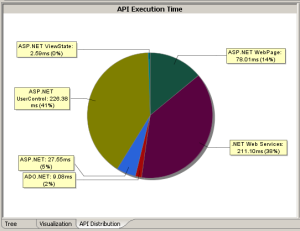

I used dynaTrace to analyze the individual load test requests in terms of their database access and rendering activities.

Grid with standard ADO.NET Binding

It turned out that a GridView with a standard SQL Data Source is ALWAYS selecting ALL rows (SELECT * FROM Products) of the underlying table – even if the PageSize is smaller than the actual result set.

Additionally – sorting is not done on the SQL Layer. The GridView is again retrieving ALL rows from the database (SELECT * FROM Products) and is then performing an In-Memory sort on the resultset. In my scenario – each page request executed a SQL Statement that returned more than 10000 rows although only 10 elements (that was the PageSize) were displayed.

Grid with standard ADO.NET Data Binding using Client Side Caching

Enabling Data Caching on the SQL Data Source of course limited the round trips to the database on my second page and therefore improved the overall performance. The Caching implementation is smart enough to already cache the sorted/paged data.

GridView with Entity Framework Data Source

Using the Entity Data Source on SQL Server turned out to be the best performing solution. Each page request actually resulted in two SQL Statements. One that queried that row identifiers to display based on the current page index and the other one to actually query ONLY THOSE rows that were actually displayed based on Page Index and Sorted Column. This scenario therefore limited the number of data that had to be retrieved from the database. Although more SQL Statements were executed on the database – the overall performance was improved by a large factor.

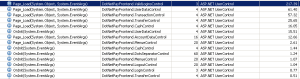

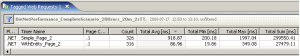

Following image shows the Web Requests that performed a request on the second data page. Once on the GridView with standard data binding and once using ADO.NET Entity Framework.

Web Requests to 2 different Grid Pages

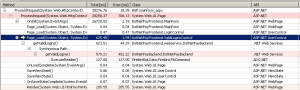

For each individual Web Request we can see the resulting SQL Statements. Simple ADO.NET Data Mapping executes a full table select on each page request:

Database View showing SQL Statements of ADO.NET Data Binding

ADO.NET Entity Framework executes statements that just retrieve the data that must be displayed. This implies more work on the database but less work in ASP.NET to render and filter the data:

Database View showing SQL Statments for ADO.NET Entity Framework Binding

The Analysis

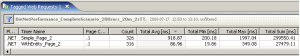

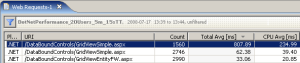

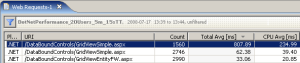

The following table compares individual measures from the 3 scenarios. We can see that twice as many requests could be handled by the page that used the Entity Data Source with only 1/10 of CPU usage and with an average response time that was 24 times faster compared to the SQL Data Source without caching.

Web Request View comparing all 3 Scenarios

The most critical performance impact in this scenario was that the SQL Data Source just requested TO MUCH data that had to be transfered from the database to the web applications. All this data than had to be processed where only a small part of it was actually displayed to the user.

Conclusion

ONLY REQUEST THE DATA THAT YOU NEED